Class blog for PAT 452/552 – Interactive Media Design II – Department of Performing Arts Technology

Friday, April 15, 2016

Working Demo

For this week I put together a working demo that maps gestures performed on my spandex wall to a filter on ambient noise in MaxMSP. Getting to this point required finding the right fabric for the interaction, one that a user could push into without touching the wall behind it, putting together a provisional lighting and camera setup, and setting up white walls behind the fabric for contrast. It also took me a while to navigate Jaime Oliver's patch and route its data to MaxMSP over a network port. Here is a video documenting the progress so far, with a simple right-to-left gesture mapped onto a bandpass filter applied to some cafe noise:
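The post doesn't show how the gesture position drives the filter, so here is a hypothetical sketch of one common approach, assuming the tracking data arrives as a horizontal position normalized to 0..1. An exponential map makes equal hand movements produce equal musical intervals in the bandpass center frequency. The function name and the 200 to 5000 Hz range are placeholders, not values from the actual patch.

```python
def x_to_center_freq(x_norm, f_low=200.0, f_high=5000.0):
    """Map a normalized horizontal position (0 = left edge, 1 = right edge)
    to a bandpass center frequency in Hz.

    Hypothetical helper: f_low and f_high are placeholder values.
    """
    # Clamp stray tracking values to the edges of the wall.
    x = min(max(x_norm, 0.0), 1.0)
    # Exponential interpolation: each equal step in x multiplies the
    # frequency by the same ratio, so the sweep sounds even.
    return f_low * (f_high / f_low) ** x


# A right-to-left gesture (x falling from 1 to 0) sweeps the filter downward.
sweep = [round(x_to_center_freq(x / 4), 1) for x in range(4, -1, -1)]
print(sweep)
```

A linear map would spend most of the wall's width in the upper octaves; the exponential version spreads the octaves evenly across the fabric.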

I had to take down the installation for this weekend's ECM concert, but I can get back to work on Sunday. At that point I will install a stronger light and set up the second camera for the vertical axis. From there I'll scale up to build my second wall and elaborate the sound design.

Saturday, April 2, 2016

A quickie this week:

I've been working hard at using water as an interface, and I finally made some positive steps in that direction this afternoon. The first video is a mock-up of the actual patch, which uses two video sources. The real patch uses a webcam as one source, but it was hard to record video and move the water at the same time, so I made a quick recording of the water and used that recording to demonstrate the processing. The patch blends the two sources with a lumakey. A lumakey takes all the pixels of one video whose brightness (luminance) falls within a certain range and makes them transparent, revealing the second video behind them. So here's the screen-capture video:
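To make the lumakey idea concrete, here is a minimal sketch of the per-pixel logic in plain Python. It is not Jitter's implementation: it assumes frames are equally sized lists of rows of (r, g, b) tuples, uses the common Rec. 601 luma weights, and the `low`/`high` range parameters are illustrative names.

```python
def luma(pixel):
    """Approximate perceived brightness of an (r, g, b) pixel (Rec. 601 weights)."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def lumakey(foreground, background, low, high):
    """Composite two equally sized frames given as lists of rows of (r, g, b).

    Foreground pixels whose luma falls inside [low, high] are treated as
    transparent, so the corresponding background pixel shows through.
    """
    out = []
    for fg_row, bg_row in zip(foreground, background):
        out.append([bg if low <= luma(fg) <= high else fg
                    for fg, bg in zip(fg_row, bg_row)])
    return out


# Key out the dark parts of a (tiny) water frame to reveal a second source.
water = [[(0, 0, 0), (255, 255, 255)]]
other = [[(10, 20, 30), (40, 50, 60)]]
print(lumakey(water, other, 0, 50))
```

Keying on a brightness range rather than a color suits the water source, where the highlights and shadows of the moving surface are what carry the motion.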

The second is a video of the result projected onto a 4x8 piece of black burlap from about ten feet away. It makes the odd masking choice in the previous video make more sense:

Friday, March 25, 2016

Embodiment and Meaning

The point of today's post is to lay out my thinking about how embodied interactions produce meaning in an interactive artwork. In particular, I was inspired by Dourish's discussion of meaning and phenomenology, which Dalsgaard and Hansen reference in their paper "Performing Perception." Dalsgaard and Hansen summarize Dourish's point as follows: “…we can however deconstruct the systemic concept of embodiment to gain an understanding of some of the tensions between user and system. First and foremost, it is a relationship characterized by the user’s exploration of the meaning of the system…Second, meaning is not a constant, rather arises from the user’s interaction with the system…this implies that one cannot control what the system means to the user, only influence the construction of meaning” (Dalsgaard, 5).

I looked up Dourish's 2001 paper "Seeking a foundation for context-aware computing" and found this memorable quote, essentially a paraphrase of Heidegger, which provides a theoretical grounding for the construction of meaning through action: “it is through our actions in the world—through the ways in which we move through the world, react to it, turn it to our needs, and engage with it to solve problems—that the meaning that the world has for us is revealed…action precedes theory; the way we act in the world is logically prior to the way we understand it.” (Dourish, 6)

This theory leaves open the possibility of multiple and evolving meanings for a piece of interactive art. This would be most true if we were fortunate enough to have a community form around a piece of art, as Dourish argues: “if the meaning of use of technology is…something that is worked out again and again in each setting, then the technology needs to be able to support this sort of repurposing, and needs to be able to support the communication of meaning through it, within a community of practice.” (Dourish, 12).

Saturday, March 19, 2016

The Humidity of Emotion -or- That Feel

At the risk of stretching myself too thin, I've decided to take on an extra layer for the upcoming installation project. This class is at its core about interactive design; our charge is to conceive of and execute robust interaction frameworks. This involves, but is not limited to, ease of entry (meaning little or no explication of the rules of the interaction), richness of recognized gestures or interaction possibilities, feedback from the installation that maps intuitively to the gesture yet is dense enough to maintain the participant's interest, seamless technical execution, an error-handling framework for unexpected input (following Benford et al. in the paper "Sensed Expected Desired"), and much more. While this is more than enough to fill my plate for the next five (!) weeks or so, I also feel compelled to explore another layer that is not the main focus of (but by no means ignored in) this class: that of a compelling aesthetic experience that creates an emotional connection with the audience.

I will by no means claim any sort of authority on the breadth of interactive artwork, but I have seen my fair share, some good, some bad. Many works, especially those that harbor a large amount of digital hardware and software, have an antiseptic feel to them, a sleek presentation that shares an aesthetic with the design of all of our technical hardware. In a discussion with Dr. Gurevich about this, he suggested that many of these works are still conceived in the vein of the Bauhaus, Late Modernism and Minimalism, with their rejection of superfluity and unnecessary ornament. I am by no means rejecting this aesthetic; this is not a manifesto, and I shy away from categorical imperatives. I am just curious whether a more emotive framework can be found for digital interaction. While many works are able to instill a sense of wonder with beautiful lights, surprising interactions and technical sophistication, or conversely a deep disquiet with dark imagery, it's rare, at least for me, to find a digital work that achieves, or even attempts, more subtle explorations of emotion. Is there a path to Nostalgia, to Ennui, to Anticipation, to Longing, or to the vast sea of feelings that are as yet unnamed, evoked only through the actual experience or through non-verbal forms of communication? C.J. Ducasse, in a 1964 article in the Journal of Aesthetics and Art Criticism, puts it well:

"The fact is that human beings experience, and the works of art and indeed works of nature too express, many feelings besides the ordinarily thought of when the term the emotions is used. These other feelings are too rare, or too fleeting, or too unmanifested, or their nuances too subtle, to have pragmatic importance and therefore to have needed names."

So how do I go in search of these subtle shades? It ultimately comes down, of course, to 'that feel': the artistic judgment of the worth of an aesthetic, of whether or not it is the correct vehicle for the feeling I am trying to invoke. In other disciplines the path to that feel can be much clearer; there are hundreds, even thousands, of years of practice and tropes to follow or flee from. In the realm of digital interactive art we have barely fifty or sixty years, maybe a hundred counting antecedents; we have no history from which to draw or of which to be ashamed. So this has led me down some curious pathways; since any place is as good to start as any other, I decided to start at what I thought was the beginning.

I was reading about the basic psychology of a viewer's emotions in relation to art when I came across an article by Vladimir J. Konečni of the Department of Psychology at the University of California, San Diego ("Emotion in Painting and Art Installations," The American Journal of Psychology). The article argues that, overall, installation art is a better vehicle than traditional painting for instilling an emotional reaction in a viewer. Most of his conclusion boils down to how installation work can be made very large and thus short-circuit its way to inducing awe. A line early in the paper caught my eye:

For an examination of their potential effect on emotion to make analytic sense, it is necessary that paintings be considered solely qua artworks – that for any observed effect to be treated as positive evidence, it needs to have been clearly caused by the paintings’ artistic attributes alone and not by their status as semiotic signs. An example is a portrait of a loved person, no longer living. Perusing such a painting, one may become genuinely sad, which, however, may have little or nothing to do with the painting’s artistic or aesthetic value, or even mimetic success. The painting does not induce emotion as a work of art, but as a displaced or generalized classically conditioned stimulus.

So, setting aside the notion of 'solely qua artworks' and its utility to psychology (and I may quibble with his conclusion a bit; there is a Rothko at the Detroit Institute of Arts that still flabbergasts me every time I sit with it), I explored the idea of the 'semiotic signs' of the artwork. For Konečni this 'taint' of external meaning made for a bad sample set for psychological research, but it could prove rich loam in my pursuit. Representational painting of course needs a subject, and I believe a painter rarely chooses a subject solely for the underlying set of signs and assumptions that portraying it would convey; being married to a painter, I know it is an esoteric mix of quality of light and color that really catches the imagination. Even when there is a conscious underlying meaning, as in Picasso's Les Demoiselles d'Avignon, it serves immediate commentary or effect; the emotional signs, the unnamed feelings evoked, are subjectively imbued into the painting by the individual viewer much later, and often to the distress of the artist. Furthermore, deliberately invoking a specific sign pointed at a specific feeling is ham-fisted, coercive, and inevitably bound to backfire because of the ultimately subjective nature of feeling. But I believe it is possible, especially in a medium as flexible as interaction, to create a 'saturated atmosphere of sign'. No mean feat, I'm sure, but possible; I believe the place to look for inspiration is film, because it shares a similar ability to combine sound and light.

A particular continuum of filmmakers comes to mind when I think of the aesthetic I would like to pursue: that of Jan Svankmajer and his immediate heirs, the Brothers Quay.

Svankmajer is a Czech filmmaker working in Prague, most active in the mid to late Communist era. He is best known for his surrealist stop-motion animation, made on a shoestring budget with found objects. The Brothers Quay, twin brothers from the U.S. who are based in England, developed a similar style, though they came to Svankmajer late because his work was hidden behind the Iron Curtain. They made a tribute film to him called The Cabinet of Jan Svankmajer. Here are some examples of their work:

A Svankmajer whimsy:

Jan Svankmajer - Jabberwocky from deanna t on Vimeo.

And an excerpt from the Brothers Quay:

These works have an emotional resonance for me not just because they are technical feats, or because of the peculiar medium they choose to work in, but because they are rich with sign; they are obviously direct successors of the surrealists, for whom subconscious sign was a stock in trade. The reuse of weathered detritus, the juxtaposition of objects and actions not normally related, the bizarre narrative, the surreal settings: all these combine to create an atmosphere 'humid' with sign, just waiting for a subject to condense on. Just like the water suspended in humid air, the condensation is indiscriminate because it is pervasive; a cold spoon, a glass of ice water, a window, anything just cool enough will cause the water to appear out of the air and saturate its surface. To follow the metaphor, as long as one is willing to accept indiscriminate condensation as the desired effect, rather than targeting one 'object' (feeling) to saturate, it is possible to create an atmosphere with emotional resonance across a variety of subjective viewpoints.

This is dangerous territory. Just as pointing at a certain feeling can backfire, creating an overbearing environment can cause a viewer to shut off from too much stimulus; furthermore, just collecting a heap of sign-laden imagery and cramming it into a container risks becoming kitsch. The collection must be delicately curated, or the 'dew point' of the whole enterprise drops and the water falls out of the air.

Then there are the requirements of this particular situation. This is an exercise in interaction, requiring an active rather than a passive audience. It would do no good to curate the sign and then simply give the participant a push-button interface; this would also break the atmosphere and drop the dew point. The interaction must be interwoven into the atmosphere, inform it, and work seamlessly within its confines. This brings me to the question that is the whole point of this endeavor. Our subconscious is activated by our senses in a myriad of ways, with more or less efficacy: the smell of rotting leaves, the mournful wail of a saxophone, the taste of a childhood dish, the quality of bright sunlight on a late winter afternoon. Sight, smell, hearing and taste seem to have a direct line to the subconscious, cutting across our years and ringing out multiple associations in their path, creating a complex and unrepeatable tone of feeling with each pass. But what of touch? What of the tactile? What of gesture? There is something so immediate about touch that it seems to defy these subconscious associations; I'm racking my brain trying to think of a nostalgic gesture, a texture that sends me into reverie, the touch equivalent of Proust's madeleine. This would be the gold standard of interactive design: not just interacting in a sign-saturated atmosphere, but having a physical interaction saturated with sign. This is my big lift for the upcoming project, and it may very well turn out to be quixotic. I'll leave the conclusion to one of our time's great tradesmen of sign, who once described himself in an interview as less of a musician and more of a curator:

Thursday, March 17, 2016

Space - Installation I

As we begin our installation projects, I've become very preoccupied with the ideas of space and concept. Of course, as interactive sound/visual/music/media/experiential/whatever-label-you-want-to-put-on-it artists, it's hard not to be preoccupied with space. This comes out of a rich tradition of artists such as Lucier and Cage, who were focused on the concept of space and related ideas. But when I was coming up with initial ideas for this installation, I noticed that they could more or less be sorted into two categories: object-based installation and space-based installation. An example of object-based installation was my balanced-spatialization cube, where spinning the cube controlled amplitude and sonic placement. An example of space-based installation was the idea of hanging sheets or swaths of cloth in Davis, where the entire room/environment is involved in the installation. I'm definitely leaning towards space-based installation at the moment, and have a couple of ideas that I'm currently running with and trying to elaborate on.

So as I think about how I want to transform a space into an installation or (a better word, in my opinion) an experience, I've been struggling with how to properly infuse concept so that the interaction itself carries meaning: so that my use of the space has meaning, and I'm using the space to the best of the installation's purposes. The space should be an integral part of the installation, not only for interaction but also for meaning. As I elaborate on these space-based installation ideas, I'm trying to keep concept and meaning in mind. Because of this, I've been doing a lot of "outside" (not-for-school) reading, exploring, word association, etc. to find ways of making space, object and interaction connect in a meaningful manner. I think I'm getting there slowly, but when you're in the steady pound that is school, it can be hard to remember that taking time to ruminate and reflect is still a valuable part of developing not only my project, but my artistic identity.

A few gallery works/installations that I'm feeling inspired by:

Shaping Space: 17 Screens by Ronan and Erwan Bouroullec

Not an interactive piece in the way that we envision interaction, but still interesting in terms of shape/material/concept.

Unfolding - Kimsooja

I saw this exhibit in Vancouver a couple of years ago, and one room in particular stuck out to me. It was a large room with nothing but hanging Korean blankets. I have a very vivid memory of weaving my way through rows and rows of blankets, all incredibly colorful, and a vaguer memory of different smells in that space. There's something about the hazy memory of a hazy memory; identity lost and found in those spaces; cultural impact; mixed-cultural identities; remembering something that was but is no longer...

Just things I'm thinking about...

The Luminator

And here is my final instrument! I thought it'd be good to have it up here for the course's documentation purposes. It's a light-based electroacoustic instrument; you can check out all the fun details at http://fidelialam.com/the-luminator

Friday, February 26, 2016

Room Prep for Ambisonics, or the Many Ways To Bake a Pi

In further preparation for an ambisonic performance in the Davis studio, I undertook a fairly exacting survey of the speaker positions in the room in order to use the information to fine tune the ambisonic 'sweet spot'. This is a short description of my methods and results.

One thing I have learned implementing this project is how to translate between 'azimuth' orientations, depending on which coordinate system and compass orientation each system uses. For example, my sensor gives out Euler angles relative to gravity and magnetic north, whereas the ambisonic library's map function uses compass-agnostic Cartesian or polar coordinates. The Cartesian coordinates of a sound position have to be derived from the sensor's angles with some trigonometry. If I use polar coordinates instead, 0 degrees points to the right side of the graph, along the 'x' axis. This causes confusion when orienting the project, because on a magnetic compass 0 degrees is north, which happens to be the 'back' wall of the Davis studio. Furthermore, the polar system doesn't operate in degrees but in radians, so that conversion has to be made as well. Lastly, since the 'front' of house is due south, the speaker numbering starts with the center speaker over the top of the projector screen.
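A minimal sketch of the heading translation described above, assuming the sensor's yaw can be read as a compass heading in degrees (0 = magnetic north, increasing clockwise) and ignoring magnetic declination. It rotates the heading so the room's front (due south) points up the graph, then converts clockwise degrees into counterclockwise radians measured from the +x axis. The function names and the `front_heading_deg` parameter are hypothetical, and the choice to put the front at the top of the graph is an assumption rather than the library's documented convention.

```python
import math


def compass_to_polar(heading_deg, front_heading_deg=180.0):
    """Convert a compass heading (degrees, 0 = north, clockwise) into a polar
    angle in radians (0 on the +x axis, counterclockwise), rotated so the
    room's front lands on the +y axis.

    front_heading_deg is an assumption: the Davis studio's front of house is
    due south, a compass heading of 180 degrees.
    """
    # Degrees clockwise from the front of the room, in [0, 360).
    relative = (heading_deg - front_heading_deg) % 360.0
    # Clockwise-from-up becomes counterclockwise-from-+x, in radians.
    theta = math.radians(90.0 - relative)
    # Wrap into the -pi..pi range.
    return math.atan2(math.sin(theta), math.cos(theta))


def polar_to_cartesian(theta, radius=1.0):
    """Polar angle (radians) and radius to Cartesian (x, y)."""
    return radius * math.cos(theta), radius * math.sin(theta)


# A sensor pointed due west (heading 270) ends up on the +x axis.
print(polar_to_cartesian(compass_to_polar(270.0)))
```

With this convention, a listener at the center facing the screen has west on their right, which is why a due-west heading lands on the right side of the graph.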

For Ambisonics, the Davis studio presents an 'irregular' (though only mildly so) loudspeaker arrangement. Ambisonics ideally calls for an evenly spaced circular array of speakers, but since this is rarely the case in real-world situations, compensation methods have been developed. The authors of the library I am using described their method in this paper from the 2014 ICMC. Their description of the solution is "...we implement an algorithm that combines Ambisonic decoding and standard panning to offset the missing loudspeakers. With this technique we can go up to any high order and adapt the decoding to many loudspeaker configurations. We made tests for stereophonics, quadraphonic, 5.1 and 7.1 loudspeaker systems and other more eclectic configurations at several decomposition orders with good perceptual results."

The library's practical implementation for irregular speaker arrangements takes the number of speakers and the progressive angles of the speakers and recalculates the decoding as described above. Here is a screenshot of the help file:

One thing I have learned implementing this project is how to translate between various 'azimuth' orientations depending on which coordinate system and compass orientation each system uses. For example, my sensor gives out Euler angles in relation to gravity and magnetic north; on the other hand the ambisonic library's map function uses compass agnostic Cartesian coordinates or Polar coordinates. The Cartesian coordinates of a sound position have to be extrapolated from the angles sent by the sensor using various trig manipulations. Or if I choose to go polar coordinates, 0 degrees is to the right side of the graph on the 'x' axis. This presents confusion when orienting the project because on a magnetic compass 0 degrees is north, which happens to be the 'back' wall of the Davis studio. Furthermore, the polar system doesn't operate in degrees, but in Radians, so a translation has to be made there as well. Lastly, with the 'front' of house being due south, the speaker numbering system starts with the center speaker over the top of the projector screen.

For Ambisonics, the Davis studio presents an 'irregular' (though mildly so) loudspeaker arrangement. Ideally for Ambisonics, a evenly spaced circular array of speakers is ideal, but there are compensations written because this is rarely the case in real world situations. The authors of the library I am using described their method in this paper from the 2014 ICMC. Their description of the solution is "...we implement an algorithm that combines Ambisonic decoding and standard panning to offset the missing loudspeakers. With this technique we can go up to any high order and adapt the decoding to many loudspeaker configurations. We made tests for stereophonics, quadraphonic, 5.1 and 7.1 loudspeaker systems and other more eclectic configurations at several decomposition orders with good perceptual results."

The library's practical implementation for irregular speaker arrangements takes the number of speakers and the angle of each speaker, and recalculates the decoding as described above. Here is a screenshot of the help file:

To the right of the main objects you can see some umenus for channels and angles. Inside the hoa.2d.decoder~ you can also see flags for mode irregular, channels, and angles. I haven't implemented this yet, but from the help file it looks like the array starts at the top of the circle and progresses clockwise, compass-style, as opposed to a polar (radian) or Cartesian -pi to pi arrangement. (The optim~ object above the decoder object is also important for irregular arrangements; its utility is also addressed in the paper.)

So all that was left to do was find the angles of the speakers in relation to the room. I started by establishing the exact center of the room. I marked it with masking tape; in the future it may be worth adding a permanent paint spike at that spot. Then I went around the room with the laser ruler and measured the distance from each speaker cone to the north and west walls. I subtracted those numbers from the wall-to-center distances to place each speaker in an x-y relationship with the center of the room. I then computed arctan(opposite/adjacent) to get each speaker's angle relative to the 0-degree line, the line between the center of the room and the center speaker over the screen. Since the room is fairly symmetrical about that line, I assumed the east side of the room has roughly the same measurements as the west side. Although Ambisonics doesn't need it, I also ran the numbers through the Pythagorean theorem to get the distance from the center of the room to each speaker cone for future reference; I believe VBAP likes to have that number.
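The measuring procedure above can be sketched in Python. One deliberate swap: a plain arctan(opposite/adjacent) cannot tell a speaker in front of the center from one behind it, so this sketch uses `atan2`, which keeps the quadrant for the speakers behind the center (the 113 and 161 degree ones). The wall distances in the usage are hypothetical round numbers, not the Davis Studio measurements.

```python
import math


def speaker_angle_and_distance(center_from_west, center_from_north,
                               spk_from_west, spk_from_north):
    """Return (angle_deg, distance) of a speaker relative to the room center.

    The angle is measured from the line toward the front (south) center
    speaker and increases toward the west wall, so west-side speakers come
    out positive and east-side speakers negative. All inputs share one unit.
    """
    # Positive 'forward' points toward the screen (south wall);
    # positive 'lateral' points toward the west wall.
    forward = spk_from_north - center_from_north
    lateral = center_from_west - spk_from_west
    # atan2 keeps speakers behind the center in the 90-180 degree range.
    angle = math.degrees(math.atan2(lateral, forward))
    distance = math.hypot(forward, lateral)  # Pythagorean theorem
    return angle, distance


# Hypothetical example: center 20 ft from both walls, speaker 3 ft west of
# center and 4 ft toward the screen.
angle, dist = speaker_angle_and_distance(20.0, 20.0, 17.0, 24.0)
```

Here `angle` is about 36.87 degrees and `dist` is 5.0. Moving the same speaker 4 ft behind the center instead gives about 143.13 degrees, which a bare arctan would have folded back to 36.87.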

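Here are the results (angle from the 0-degree line, then distance from the center of the room to the speaker cone; the number in parentheses is the mirrored speaker on the other side of the room):

- Speaker 1: 0 degrees, 15' 2"
- Speaker 2 (and 7): 68.7 degrees, 15.65'
- Speaker 3 (and 6): 113 degrees, 15.6'
- Speaker 4 (and 5): 161 degrees, 15.1'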
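Since the decoder's irregular mode wants an angle for every speaker, one way to turn the four measured angles into a full seven-speaker list is to mirror them across the 0-degree line. This Python sketch assumes the numbering runs continuously around the ring (so 5, 6, 7 mirror 4, 3, 2, as the parenthetical pairs suggest). Whether hoa.2d.decoder~ wants degrees or radians, and in which direction it counts, should be confirmed in its help file.

```python
import math

# Measured angles (degrees) for speakers 2-4, from the results above.
MEASURED_DEG = [68.7, 113.0, 161.0]


def full_ring(one_side_deg):
    """Mirror one side of a left/right-symmetric ring across the 0-degree
    line and return every speaker angle in [0, 360), in speaker order."""
    mirrored = [round(360.0 - a, 6) for a in reversed(one_side_deg)]
    return [0.0] + list(one_side_deg) + mirrored


ring_deg = full_ring(MEASURED_DEG)
ring_rad = [math.radians(a) for a in ring_deg]  # if the decoder wants radians
```

`ring_deg` comes out as 0, 68.7, 113, 161, 199, 247 and 291.3 degrees, one entry per speaker.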

I have two goals going forward. With the relationship of the speakers to the center of the room now established, I'm going to go around the room and check, and maybe adjust, each speaker's angle of address so that it points at the center of the room. I also intend to double-check these measurements and turn them into a detailed diagram that can be kept on hand for further work and learning in the Davis Studio.

BREAAAK!

Thursday, February 25, 2016

We have a spinning wheel, baby!!!!!!

So I constructed the first wheel. I learned a lot about steel pipe, galvanized pipe, stainless steel, and the art school: lots of things you can and cannot do in those areas. But I successfully created a base, leg, and arm for the spinning wheel. The bearings I ordered haven't come in yet, but I think it spins well enough with my new end caps, so I'm going to see how those last.

Next is to see what kind of light data spinning wheels even give a photocell!!! Hopefully something!!!

Saturday, February 20, 2016

Ambisonics

I've still got a little bit of building to do, but I'm focusing on sound creation this week. I think my project can be used in a variety of ways, but one of my biggest interests is the spatialization of sound: recreating 3D sound environments and manipulating them. I think my project lends itself very well to this application. Interaction with the Bucky is hemispherical by nature: its round outer edge translates easily to the horizon, and the various motions back and forth from the edge map nicely onto an overhead dome. The data from the onboard ITU maps fairly easily to the coordinates of a virtual soundfield, as I showed in my previous post. The next step is figuring out how to implement the virtual soundfield. I already had a specific MaxMSP library picked out, but in a conversation Dr. Gurevich brought up a competing implementation, so I thought I should look at both for the sake of thoroughness.

The method I was planning on using is one I have used before, but only in a binaural (headphone) implementation: the method known as Ambisonics. The other method is a somewhat newer system called Vector Based Amplitude Panning (VBAP). Both have their strengths and weaknesses, and I'll try to survey a few of them here. VBAP was developed by Ville Pulkki, who I believe is based in Helsinki. From my very cursory research, VBAP seems to build on the traditional stereo panning concept, where the ratio of the intensities coming out of two speakers makes the sound appear to sit somewhere between them. VBAP extends this idea to any number of loudspeakers. By using vector mathematics to express the position of the sound and then combining it in a matrix with the loudspeaker positions, it creates a convincing, easy-to-manipulate virtual soundfield. Like stereo, it uses intensity ratios to position the sound, but instead of a stereo pair it uses loudspeaker triplets, with loudspeakers above the horizontal plane to give elevation information. It also smooths the transition of a sound from one triplet to the adjacent triplets so that the sound can move through the full range of motion.
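The stereo-pair idea that VBAP builds on can be shown in two dimensions. This minimal Python sketch follows the vector-base formulation for a single horizontal loudspeaker pair (the 3-D version does the same thing with a 3x3 matrix and a triplet): it solves for the two gains that make the speaker direction vectors add up to the source direction, then normalizes them for constant power. It is an illustration of the technique, not the code inside Pulkki's Max library.

```python
import math


def vbap_pair_gains(source_deg, spk1_deg, spk2_deg):
    """2-D VBAP gains for one loudspeaker pair.

    Solves p = g1*l1 + g2*l2, where p, l1 and l2 are unit vectors toward the
    source and the two speakers, then normalizes so g1^2 + g2^2 = 1.
    """
    def unit(deg):
        r = math.radians(deg)
        return math.cos(r), math.sin(r)

    px, py = unit(source_deg)
    l1x, l1y = unit(spk1_deg)
    l2x, l2y = unit(spk2_deg)
    # Determinant of the 2x2 matrix whose columns are l1 and l2.
    det = l1x * l2y - l2x * l1y
    if abs(det) < 1e-12:
        raise ValueError("speaker directions must not be collinear")
    # Apply the inverse matrix to p (Cramer's rule).
    g1 = (px * l2y - py * l2x) / det
    g2 = (py * l1x - px * l1y) / det
    norm = math.hypot(g1, g2)
    return g1 / norm, g2 / norm
```

A source centered between speakers at -30 and +30 degrees gets equal gains of about 0.707 each; a source sitting exactly on one speaker gets all of the gain. A negative gain means the source lies outside the pair, which is the cue to switch to the adjacent pair (or triplet, in 3-D).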

Ambisonics, on the other hand, is much less intuitive under the hood; it uses some very cool psychoacoustics that I just barely understand to achieve the spatialization effect. It's an extension of Alan Blumlein's invention, the Mid-Side microphone technique. Instead of VBAP's approach of localizing the sound in a triplet of loudspeakers, Ambisonics encodes the position information so that it emanates from ALL of the loudspeakers through a system of phase cancellation and correlation (I think... even after reading about it a hundred times it still seems like magic to me. Probably why I'm a sound lover; this stuff just fascinates me). Ambisonics requires an encoding stage and a decoding stage on either side of the position determination, which can be processor intensive; this is one of the reasons that 5.1 surround overtook Ambisonics for surround sound in consumer electronics. Until recently, the processing power needed put decoders out of the price range of anyone but enthusiasts.
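To see where the "emanates from all of the loudspeakers" behavior comes from, here is a minimal 2-D (horizontal-only) Ambisonics sketch in Python. Encoding turns a source angle into circular-harmonic components, and a basic decoder for an evenly spaced ring projects those components back onto each speaker. This is a textbook simplification that assumes a regular ring with at least 2 x order + 1 speakers; normalization conventions vary, and it is not how the HOA library implements its encoder or decoder.

```python
import math


def encode_2d(theta, order):
    """Circular-harmonic coefficients for a source at angle theta (radians):
    [1, cos(t), sin(t), cos(2t), sin(2t), ...] up to the given order."""
    coeffs = [1.0]
    for m in range(1, order + 1):
        coeffs += [math.cos(m * theta), math.sin(m * theta)]
    return coeffs


def decode_regular(coeffs, speaker_angles):
    """Basic decode for an evenly spaced ring of speakers (angles in radians).
    Assumes len(speaker_angles) >= 2 * order + 1."""
    order = (len(coeffs) - 1) // 2
    k = len(speaker_angles)
    gains = []
    for phi in speaker_angles:
        g = coeffs[0]
        for m in range(1, order + 1):
            c, s = coeffs[2 * m - 1], coeffs[2 * m]
            g += 2.0 * (c * math.cos(m * phi) + s * math.sin(m * phi))
        gains.append(g / k)
    return gains


square = [i * math.pi / 2 for i in range(4)]  # speakers at 0, 90, 180, 270 deg
gains = decode_regular(encode_2d(0.0, 1), square)
```

For a first-order source at 0 on a square of speakers, the gains come out at roughly 0.75, 0.25, -0.25 and 0.25: every speaker contributes, and the one opposite the source is driven in opposite polarity, which is one concrete face of the phase cancellation described above.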

The two systems, as I understand it, produce relatively similar results, so the difference is in the implementation. Which one to use may depend on the situation, one being better for permanent installations and the other for unknown performance spaces; I don't have enough experience to make that call. VBAP requires entering each speaker's position and distance from the 'listener', so its initial setup is a little more difficult. Ambisonics, as far as I can tell, is more speaker-position 'agnostic' (at least within reason), making setup much easier and allowing for a variety of performance settings. The problem with Ambisonics is that it has a much tighter 'sweet spot' (though I understand it is getting much wider as decoding speeds up and more thorough HRTFs are implemented), and listeners can perceive phasing artifacts if they move their heads too quickly within the sweet spot.

There are libraries for both methods readily available for MaxMSP. On the VBAP side, the library was written by the method's inventor, Pulkki, and he has a paper on the implementation here. On the Ambisonics side, there are a few libraries out there. This page at Cycling '74 lists a couple of the proven ones, including the High Order Ambisonics (HOA) library from CICM, which I have used before.

I think I'm probably still going to go with my initial instinct and use Ambisonics. I would like to give a well-thought-out reason for this, but it's mostly based on my feeling that Ambisonics is just too cool a psychoacoustic phenomenon not to play with. Also, the HOA library implementation is very advanced, including objects that help with connecting the thousand patch lines inherent in spatialization.

Friday, February 19, 2016

Max Patch for Water Glass

I've spent this week figuring out how to get the serial data from the Arduino side of Water Glass to communicate with a Max patch, where I can map audio effects onto different gestures. I found a few versions of a Max patch online that could communicate with the Touché sensor, most helpfully from madlabk, but it took quite a few hours of debugging to work through his code and adapt it to my purposes. Now I have a working Max patch with a training algorithm that recognizes gestures, plus a debouncing function!
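The post doesn't show madlabk's patch, so this is not his algorithm; it's a generic Python sketch of the two ideas named above, assuming each sensor reading arrives as a list of numbers (for a Touché-style sensor, something like one frequency sweep). Training stores one reference reading per gesture, recognition picks the nearest stored reference, and the debounce only switches gestures after the same match wins several frames in a row. The class and parameter names are hypothetical.

```python
import math
from collections import deque


class GestureRecognizer:
    """Nearest-neighbour gesture matching with a consecutive-frame debounce."""

    def __init__(self, stable_frames=3):
        self.references = {}              # gesture name -> reference reading
        self.stable_frames = stable_frames
        self.recent = deque(maxlen=stable_frames)
        self.current = None               # last debounced gesture

    def train(self, name, reading):
        """Store (or overwrite) the reference reading for a gesture."""
        self.references[name] = list(reading)

    def classify(self, reading):
        """Return the stored gesture with the smallest Euclidean distance."""
        return min(self.references,
                   key=lambda n: math.dist(self.references[n], reading))

    def update(self, reading):
        """Feed one reading and return the debounced gesture name."""
        self.recent.append(self.classify(reading))
        # Switch only once the same label has won stable_frames times in a row.
        if len(self.recent) == self.stable_frames and len(set(self.recent)) == 1:
            self.current = self.recent[0]
        return self.current
```

A single stray frame won't flip the output, at the cost of a few frames of latency; `stable_frames` trades responsiveness for stability. Because the stored references describe the sensor in one physical setup, they also have to be retrained wherever the sensor ends up, which is the issue raised in the next paragraph.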

I realized there won't be a way to train the patch to recognize gestures away from the performance stage, because the sensor can't move much between backstage and where I will perform, or else the readings it stores will no longer be valid. Therefore I will have to train the gestures on stage, much as a string player tunes on stage. I'm now thinking about how to incorporate this process, and my interaction with the laptop in general, into the performative arc of the piece, which may be a useful question for everyone else too!

Monday, February 15, 2016

Monochord / Installation Idea?

At the beginning of the semester, I was looking across new instruments (new to me) and came across the monochord. Although smaller monochords do exist, I am most intrigued by the large ones.

The beauty of the monochord is how easy it is to play. This goes against what I'm trying to build as an instrument for my current project, but it has me already thinking ahead to how I could apply a certain aspect of it to some kind of installation. Though I don't necessarily want to work with strings (even though that is a possibility), I would really like to build something like this: its function is apparent, it's easy to play, and its simple drone-like sound is extremely soothing. I think bringing those qualities to an interactive installation would be extremely interesting.

Saturday, February 13, 2016

Math

The 'map' you see on the screen is actually the sound field map of an ambisonic encoder, so I've taken my first step toward an Ambisonic controller. Cheers!

Monday, February 8, 2016

Polyphonic Arduino Tone Generation With Mozzi Library

Last semester I took Dance Related Arts and had the opportunity to build three instruments that were incorporated as stage pieces in our performance. My group's theme was the impact of internet surveillance and the increasing presence of social media and technology in our lives. One of the instruments I built was an array of 5 photocells, each generating its own tone based on its brightness. For the instrument to live on stage, I had to either develop a Bluetooth/WiFi system for sending the sensor data, or find a way to do the sound synthesis and amplification directly on the Uno board. I chose the second. Here are a couple of clips:

In order to synthesize multiple tones and have advanced control over their parameters, I used a library called Mozzi. Mozzi opens the Arduino environment up to things like multiple oscillators, envelopes, filtering, delay and reverb. It supports sensors like piezos, photocells and FSRs right out of the box and can be easily modified for any other sensor/trigger mechanism.
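Mozzi's own C++ oscillator classes do this work on the board; the Python model below only illustrates the underlying idea of several wavetable oscillators, each driven by a photocell reading, summed into one output. The audio rate, table size, pitch range and signed 8-bit output scaling are assumptions chosen for the example, not Mozzi's API or its exact output format.

```python
import math

# Assumptions for this sketch: a 256-entry sine table, a 16384 Hz audio rate,
# and a hypothetical 200-1200 Hz pitch range.
TABLE_SIZE = 256
AUDIO_RATE = 16384
SINE_TABLE = [math.sin(2 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]


def reading_to_freq(reading, lo_hz=200.0, hi_hz=1200.0):
    """Map a 10-bit photocell reading (0-1023) linearly onto a pitch range."""
    return lo_hz + (hi_hz - lo_hz) * reading / 1023.0


class WavetableOsc:
    """Phase-accumulator oscillator reading from a shared wavetable."""

    def __init__(self, freq=0.0):
        self.phase = 0.0
        self.freq = freq

    def next_sample(self):
        sample = SINE_TABLE[int(self.phase) % TABLE_SIZE]
        # Advance by freq * table_size / audio_rate table steps per sample.
        self.phase = (self.phase + self.freq * TABLE_SIZE / AUDIO_RATE) % TABLE_SIZE
        return sample


def mix(oscillators):
    """Average all voices and scale to a signed 8-bit range (-128..127)."""
    total = sum(o.next_sample() for o in oscillators) / len(oscillators)
    return max(-128, min(127, int(round(total * 127))))


# Five voices, one per (hypothetical) photocell reading.
voices = [WavetableOsc(reading_to_freq(r)) for r in (100, 300, 500, 700, 900)]
```

Averaging rather than plainly summing keeps five voices from clipping; on the board the same trade-off usually shows up as a bit shift after the sum. In a real loop the photocell readings would be re-read at a slower control rate and written into each oscillator's `freq`.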

My final design was an array of 5 photocells connected to an Uno, with the audio amplified through a JamBox via a 1/8-inch cable.

Here is the library : http://sensorium.github.io/Mozzi/

Friday, February 5, 2016

Looks Great On Paper!

As so often happens when you close the gap between idea and reality, it's the little banal details that start to turn into big problems; molehills become mountains. The current design I'm working on is no different. That being said, I've never had a prototype go as smoothly from conception to object in hand as this one; chalk that up to the printing technology, which lived up to its main claim to fame: fast prototyping. So, without further ado...

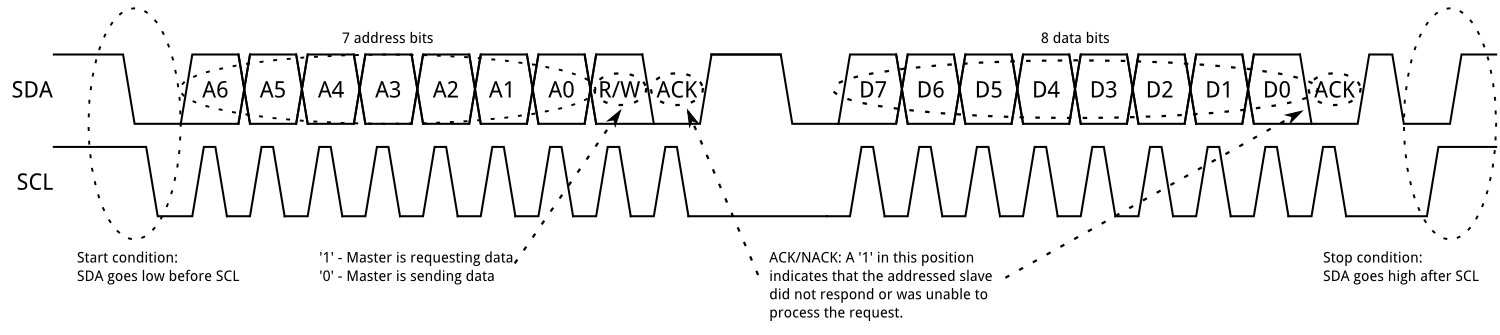

So first, the good things. It's great to have the object in my hand, to be able to play with it and feel its strengths and weaknesses. The interaction feels much as I imagined it, and the scale relative to the human hand is just about right. The assembly was fairly straightforward and went as I imagined... no 'oops, I didn't think of that.' I was also able to get it into the hands of my design focus group (my wife) for immediate evaluation and feedback. For a first iteration, I'm very satisfied. Emphasis on first iteration.

The bad things. Because of the nature of the 3d printing process (I still prefer the word Stereo-lithography, but for the sake of clarity I'll go the more prosaic route), both the housing and the plunger are covered with tiny, horizontal ribs. This makes the action in and out rough, though I imagine it will get better with time as use smooths out the inside. I could have also spent a few hours sanding it, but I decided that I wanted to get it assembled and out for a test drive before I committed to putting that time in. I used elastic cord for the compression elements, and I think I would rather use metal springs as the cord is just not strong enough to provide a satisfying resistance. I'm also a little concerned that the plunge depth is not deep enough to have a wide range of sensor data that will be needed for maximum expressiveness. The solution of course is to make the housing cylinder longer, but then the device starts to move out of the 'hand sized' scale that I was trying to adhere to. I also think the ring could be a little larger, so in the next iteration I'm going to have to strike a balance between those two opposing concerns. The results of the focus group testing proved inconclusive. When she first interacted with it she didn't roll it around on the ring like I envisioned. I thought the design just begged for that motion, but apparently it didn't. All is not lost, it may become more apparent when there is feedback (sound) hooked up to the interface and the movement is tied to that.

Moving over to the electronics department...

The i2c bus works like a charm! I've wanted to try out sensors using i2c for a while, and this was a perfect opportunity. The i2c bus is an old but proven technology that uses a data line and a clock line (SDA and SCL) to move information through your circuit. So instead of running a separate wire back to the microcontroller for each sensor reading, you only need these two wires (plus power and ground). Each sensor, or slave device, has its own address, and the i2c protocol uses those addresses to sort everything out, giving smooth sensor data from multiple devices over minimal wiring. Data moves one bit per clock pulse: the data line is only allowed to change while the clock is low, and it is read while the clock is high. I found an informative graphic on Sparkfun:
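The bit-level rule can be modeled in a few lines of Python. This is a simplified sketch (no START/STOP conditions, no clock stretching) assuming a standard 7-bit address; 0x28 below is only an example address, not necessarily one of this project's sensors.

```python
def address_byte(addr7, read):
    """First byte after a START: the 7-bit device address followed by the
    R/W bit (1 = read from the device, 0 = write to it)."""
    if not 0 <= addr7 < 0x80:
        raise ValueError("i2c addresses are 7 bits")
    return (addr7 << 1) | (1 if read else 0)


def bits_msb_first(byte):
    """i2c shifts each byte out most-significant bit first."""
    return [(byte >> i) & 1 for i in range(7, -1, -1)]


def clocked_frame(byte):
    """(SCL, SDA) line states for one byte plus the ACK slot.

    SDA only changes while SCL is low and is held steady while SCL is high,
    when the receiver samples it. In the 9th (ACK) clock the transmitter
    releases SDA, modeled here as high; a real receiver pulls it low to
    acknowledge.
    """
    states = []
    for bit in bits_msb_first(byte) + [1]:
        states.append((0, bit))  # clock low: transmitter sets SDA
        states.append((1, bit))  # clock high: receiver samples SDA
    return states


frame = clocked_frame(address_byte(0x28, read=False))
```

Addressing device 0x28 for a write sends the byte 0x50, i.e. the bits 0101 0000 followed by the ACK slot, spread over nine clock pulses.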

Adafruit supplied the chips and as usual a super friendly library and scads of documentation. It turns out that they supply so many different sensors and upgrades to their old sensors that they have come up with a meta-library to handle reading the sensors for all of their products. It's called the Unified Sensor Library and it makes getting data as easy as calling a member function of a declared object.

Now that I've got the data, I have to figure out what to do with it. XYZ orientation data is the obvious choice, and I don't really even need the X data, which tells me which way the object is turned relative to magnetic north. I'll end up mapping the Y and Z data onto the unit circle of a polar (as opposed to Cartesian) graph, and then adjusting the scale of the graph based on the depth of the plunger to compensate for the different readings at different plunger elevations. Or something like that; my math is a little rusty in this area, so I'm just going on hunches at this point. But it looks good on paper!
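One way the hunch above could look in code is this minimal Python sketch: the direction of tilt becomes the polar angle and the amount of tilt becomes the radius, with the plunger depth widening the usable tilt range. `max_tilt_deg`, `depth` and `depth_gain` are hypothetical calibration values, and the depth compensation is only one guess at what "adjusting the scale" could mean.

```python
import math


def tilt_to_polar(y_deg, z_deg, max_tilt_deg=30.0, depth=0.0, depth_gain=0.5):
    """Map Y/Z tilt angles onto a point (radius, angle) in the unit disk.

    depth is the plunger travel normalized to 0..1; deeper settings widen the
    tilt span so the same lean produces a smaller radius.
    """
    depth = max(0.0, min(depth, 1.0))
    angle = math.atan2(z_deg, y_deg)  # which way the device leans (radians)
    span = max_tilt_deg * (1.0 + depth_gain * depth)
    radius = min(math.hypot(y_deg, z_deg) / span, 1.0)  # how far it leans
    return radius, angle
```

With the defaults, a 15-degree lean along Z lands halfway out at 90 degrees, while the same 15-degree lean along Y with the plunger fully down lands a third of the way out.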

Wednesday, February 3, 2016

Sound Inspiration

I believe it was Spencer who brought up http://youarelistening.to/chicago in John Granzow's Performance Systems class. On the site, you have the opportunity to listen to live police scanner feeds from various cities while an ambient music playlist plays in conjunction with the police scanners.

I think there's a nice juxtaposition between the very calm, ambient music and the crackles of the police scanner. In class we delved into how there's an interesting philosophical element with the violence/chaos usually associated with police scanners and how it can almost become just background noise, particularly when played with the ambient playlist.

I could delve into more philosophical detail, but I think I'll save that for another time!

Anyway, I like that kind of juxtaposition in sound, with the smooth ambient background being...interrupted? by the police crackles, and it's something I'm taking into consideration with my own sound design and sound space.

Tuesday, February 2, 2016

Dulcimer influences on a gurdysian design

Through continued sketching of the Gurdysian Manipulator, I found myself drawing inspiration from Appalachian dulcimers for the left hand control layout.

Like a hurdy gurdy, the Appalachian dulcimer is modally keyed. Playing a melody on a dulcimer looks (and often sounds) similar to playing a melody on a hurdy gurdy: one reaches over the body/neck to play monophonic lines while the other hand excites all the strings (...usually). This design construct could be useful in the case of a gurdy inspired digital instrument.

I'm considering using 3 linear soft potentiometers (+ linear FSRs?) in a string-like configuration to set boundaries for looped audio material. This could allow one-handed control of a loop's beginning and end, as well as a "set" function. Perhaps these could also be used to control effects if I design it to be multimodal.
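As a rough Python sketch of the loop-boundary idea, assuming 10-bit readings (0-1023) from two soft pots and a loop buffer measured in samples (all names are hypothetical): the two positions become start and end points, and the "set" step latches them so that sliding a finger doesn't move the playing loop until the player commits.

```python
def loop_bounds(start_reading, end_reading, buffer_len, adc_max=1023):
    """Map two soft-pot readings to (start, end) sample indices, in order."""
    def to_index(reading):
        reading = max(0, min(reading, adc_max))
        return round(reading / adc_max * (buffer_len - 1))

    a, b = to_index(start_reading), to_index(end_reading)
    return (a, b) if a <= b else (b, a)


class LoopController:
    """Pending boundaries follow the fingers; set() makes them active."""

    def __init__(self, buffer_len):
        self.buffer_len = buffer_len
        self.pending = (0, buffer_len - 1)
        self.active = self.pending

    def move(self, start_reading, end_reading):
        self.pending = loop_bounds(start_reading, end_reading, self.buffer_len)

    def set(self):
        self.active = self.pending
```

Sorting the two indices means either pot can act as the start, so the player doesn't have to keep track of which finger is which.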

I'll post a sketch here within the week. If this works, I think I'll change the name from Gurdysian Manipulator to Gurdulcimator.

3D Printing an Arduino Mount

I've had the opportunity in PAT 461 to 3D print some objects for my first instrument. I printed an Arduino Uno mounting bracket and a table. Unfortunately, the table I made wasn't a full solid, and the print skipped one of the legs.

Here are some images of the two parts:

The Arduino Uno mounting bracket was made by a user on SketchUp's 3D Warehouse community and fits the board well. I've enjoyed making models and being able to confirm that an Arduino board will be able to integrate with it. Prototyping instrument designs in CAD is fast and now a viable option for developing Arduino based devices.

STL file for bracket here: https://umich.box.com/arduinounostl

Saturday, January 30, 2016

Thinking about strings

I found this instrument fascinating. Kevin, have you seen this?

IT SOUNDS BEAUTIFUL.

I think what I enjoy so much about it is the acoustic nature of it. But I do wonder what kind of sounds you could synthesize or create from amplifying the actual string resonance.

Such an interesting instrument. I'm wondering what the other pedal does, and how exactly the strings are excited.

Initial Design Finished and ready for printing, some thoughts on sound...

One of my favorite things about this semester is finally having the opportunity (and the reason) to wrap my head around Computer Aided Design. While I could have used it before in many instances, its use was never imperative because I didn't have the concurrent Computer Aided Manufacturing capabilities...this has changed now that we have generous access to multiple stereolithographs, or as they are more prosaically and less poetically called, 3D printers.

While we have access to top-of-the-line design software in the labs at Michigan, specifically the industry-standard Solidworks, I decided instead to start working with the Autodesk Fusion360 software. This was mostly so that, as a commuting student, I wouldn't be tied to the computer labs and could work on my designs at home. Another reason was that Fusion360 has a very generous three-year free student license and much more affordable rates after that. I didn't want to dive too deep into Solidworks, fall in love, and then not get to use it after graduation because of the $3500+ license fee.

I have found Fusion360 to have a very easy, almost curated, learning curve. It comes with a huge host of in-application, hands-on tutorials and comprehensive online documentation. It also works in the cloud automatically, making sharing and backups as easy as using social media. So, all that being said, here is what I've come up with so far...

After our last in-class review, I've tried to think about what 'sound' the Bucky would be tied to. This wasn't my focus in my first conception; I was more attentive to various design concerns. I wanted several things: something that could use as many of the affordances of the shoulder-arm-elbow-wrist-finger combination as possible (impossible to get them all, our hands are amazing devices!), something that would invite touch, and something that would make understandable musical changes right out of the box while allowing for creative growth. As I think about sound, I'm still thinking as much about 'What are the controlled parameters?' as about a particular synthesis patch. For instance, by combining data from the two sensors, I can get Y-axis velocity data from the ITU and distance data from the proximity sensor. This could lead to an interaction with a metaphor in the pizz-to-arco range of a string instrument. The ITU data would set the initial attack time of the sound, with faster movement meaning a shorter attack, and the depth of the plunger would set the initial attack level, deeper being louder. So a fast descent of the Bucky with a shallow compression of the plunger would be a quiet pizzicato; light compression of the plunger with almost no Y-axis movement would be a soft arco; fast Y-axis movement with deep compression would be an accented sustained attack, and so on. Of course, this doesn't have to be tied to the volume envelope; it could be applied to the filter, to an LFO, or to whatever we would usually tie to an ADSR in the modular synth paradigm.
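The pizz-to-arco mapping can be written down as a small Python sketch, assuming the Y-axis velocity and plunger depth are already available as numbers (velocity relative to a hypothetical `max_velocity`, depth normalized from 0 to 1). The attack-time range and the linear ramp are placeholder choices; the same two outputs could just as well drive a filter or LFO envelope.

```python
def attack_params(y_velocity, plunger_depth, max_velocity=1.0,
                  min_attack_ms=5.0, max_attack_ms=800.0):
    """Faster Y-axis motion -> shorter attack; deeper plunger -> louder level.

    Returns (attack_ms, level). All ranges here are hypothetical.
    """
    speed = min(abs(y_velocity) / max_velocity, 1.0)
    attack_ms = max_attack_ms - speed * (max_attack_ms - min_attack_ms)
    level = max(0.0, min(plunger_depth, 1.0))
    return attack_ms, level


def attack_envelope(t_ms, attack_ms, level):
    """Linear attack ramp: 0 at t = 0, reaching `level` after attack_ms."""
    if t_ms <= 0:
        return 0.0
    return level * min(t_ms / attack_ms, 1.0)
```

A fast, shallow move gives `attack_params(1.0, 0.2) == (5.0, 0.2)`, the quiet pizzicato; a still hand with a half-pressed plunger gives `(800.0, 0.5)`, the soft arco.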

The top compartment is made to hold all of the electronics, with a proximity sensor mounted on the bottom of the removable disk and the Inertial Tracking Unit mounted on the top. As both of my sensors are i2c there will be a minimal amount of wiring in the housing as I believe I can daisy chain power and data, hopefully coming out of the device with a clean four wire + shield cable. Later on I want to look at modifying the electronics to include Bluetooth, but that's for an iteration far in the future. I do not consider this the finished geometry for the project, this is more proof of concept, and it was designed with the Cube2 printers in mind, which only have an effective print surface of around 4.5". While I believe the height, at around 4.5" assembled and at rest is about right for the scale of the human hand, I would like to extend the ring out another two inches or so. Now that we have access to the department printers, this will be in my next iteration because I believe there is around an eight inch print surface.

The new departmental printers! I've been working with John Granzow a bit on the setup and calibration of the two printers, and we've done some trial runs of simple parts. There are a lot of variables that must be balanced (material, nozzle, heat, travel speed, etc.), but they should be up and running in a little while. Here's some video of a test run. I love the music of the stepper motors!

Friday, January 29, 2016

The Alienation of Ornamental Interaction

In reading Johnston's "Designing and evaluating virtual musical instruments: facilitating conversational user interaction" for our discussion on Thursday, I was very glad for the distinction he made between instrumental and ornamental interaction. Applying it to my own project of income-inequality data-driven percussion, it's clear to me that my current design encourages (or even mandates) an ornamental mode of interaction. Currently, income-inequality data structures the mapping of percussive hits to audio processing, with the mapping changing over the course of the composition. What struck me most about this realization was that this mode of interaction may, by design, make the user feel some kind of alienation, as documented in Johnston's qualitative study. For example, Musician J is quoted as saying: "If you want a feeling of domination and alienation, that's certainly there with that one. I'm not being sarcastic. If you want the feeling that the machine actually is the dominant thing then that creates it quite strongly...It's very strong, the feeling of alienation makes me uneasy. And if it's in a different section of long piece then it certainly creates tension."

I am going back and forth on how directly I want to elicit (or not elicit) this kind of emotional response from the user. On the one hand, a reading of the concept of the piece would be reinforced by a feeling of alienation, which mirrors the middle class's feeling of alienation from the wealth of American society. On the other hand, I generally don't like art works that direct user responses so explicitly, and that is something worth interrogating. If an art work has such a dictatorial approach, a subject might find it less engaging, because the work of figuring out the metaphor/message would already be done for them. Furthermore, in such a political work, I'm worried about reducing a very politically charged topic to trivial mappings. What's more interesting to me is the possibility of creating a space that suggests a feeling of alienation, which then prompts the user to reflect on and explore where that alienation occurs in their lives away from my musical interface; in other words, to put the pieces together for themselves, and in a politically productive fashion. That will be quite a hard challenge, but no one ever said this project would be easy!

Thursday, January 28, 2016

Initial ideas...and some problems.

As we move along this semester, I thought I'd present my current working idea and some of the issues I'm working on resolving. I was initially trying to work on a cube-shaped instrument (kind of like a Rubik's cube) that would let me pull its individual cubes out and orient them around its axes to control sound. However, after finding some not-so-great cube-shaped instruments and not being able to figure out how to measure each piece's distance from the main cube, I have totally abandoned that idea.

Instead, I was drawn to these hanging lights that you always seem to see in super hip cafes and bars.

I was also perusing my favorite design site and stumbled across Phillip K. Smith III's "Double Truth", which explores shadow and light as it falls on objects. Here's a selection from a gallery showing:

The combination of the hanging lights and this play of shadow and light made me think of a system built around that interplay. I envision an apparatus that looks something like this:

I imagine a series of lights or lamps hanging from a frame, shining down on photoresistors. After some thought about how to make the interaction more visually engaging and visible, I'm drawn to the idea of mounting the photoresistors on geometric shapes (akin to the random shapes on the wall in Smith's work). I toyed with the idea of using random old objects, but if I use already existing objects, I'll impose a visual/aesthetic/sonic/contextual identity on the system I create. If I use "random" geometric shapes (which I'm thinking of 3D printing), I'll have more control over the shadows I create without imposing an identity on the work, and I think it'll bring more focus to this idea of shadow and light. Maybe I could create cavities underneath the lights for cylinders with varying "tops", so that I can rotate the cylinders to control the shadows without necessarily changing the positions of these shapes. Hmm...things to think about.

As for the lights themselves, I have a two-type light system in mind (four lights, two of each type). One pair will simply hang from the frame, and I'll be able to make them swing to create continuously changing light conditions. The other pair I'll be able to bend like desk lamps, giving me greater control over what those lights are doing and what shadows they create.

Peter suggested that I find a way to actuate the lights so that I have more control over when each light is on. I really, really like that idea, but it'll definitely take more research than I've done in that department. I want to be able to tap a light to turn it on and then tap it again to turn it off.
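The tap-on/tap-off behavior is basically a toggle with debouncing: flip the light's state on each new touch, but ignore the rapid on/off chatter a physical sensor produces during a single tap. Here's a minimal sketch of that logic, assuming some touch or vibration sensor on each lamp that reads as simply touched/not touched (the sensor type and the 50 ms debounce window are my assumptions, nothing has been chosen yet).

```python
class TapToggle:
    """Turn a light on with one tap and off with the next, ignoring contact bounce."""

    def __init__(self, debounce_ms: int = 50):
        self.debounce_ms = debounce_ms
        self.light_on = False
        self._pressed = False
        self._last_change_ms = None

    def update(self, pressed: bool, now_ms: int) -> bool:
        """Feed one raw sensor sample (True while touched); return the light state."""
        if pressed != self._pressed:
            # Accept the change only if enough time has passed since the last one.
            if self._last_change_ms is None or now_ms - self._last_change_ms >= self.debounce_ms:
                self._pressed = pressed
                self._last_change_ms = now_ms
                if pressed:  # toggle on the touch itself, not on the release
                    self.light_on = not self.light_on
        return self.light_on


# A touch at 10 ms with a bounce at 15 ms, then a second touch at 400 ms.
lamp = TapToggle()
samples = [(False, 0), (True, 10), (False, 15), (True, 20), (False, 200), (True, 400), (False, 500)]
print([lamp.update(p, t) for p, t in samples])
# -> [False, True, True, True, True, False, False]
```

Toggling only on the touch (not the release) keeps one tap equal to one state change, which is what "tap it again to turn it off" needs.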

I really like this whole idea because not only do I have a sonic identity that I can hear in my head, but there's also a learning aspect to it. It'll take me (and whoever else chooses to engage in this system) time to learn how the shadows, lights and varying placements of the photoresistors will influence the sound. There'll also be a learning aspect whenever the system is placed somewhere new--I'll have to adapt to the new lighting conditions of the room.

So where am I headed from here? I haven't done a whole lot with photoresistors, so I'm experimenting with how dynamic they can be. I also plan on experimenting with different kinds of lights at different wattages. I'm also looking at how to best hang these lights from a frame, and what kind of weight the frame would need to be able to carry depending on what light I go with.
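To see how dynamic a photoresistor actually is, the usual approach is to put it in a voltage divider with a fixed resistor and read the midpoint with an analog input. The sketch below turns such readings back into resistance and also maps them onto a 0..1 control range between "dark" and "bright" readings calibrated in the room, which would handle re-adapting to a new space. The 5 V supply, 10-bit ADC, 10 kOhm fixed resistor, and the wiring order are all assumptions for illustration.

```python
VCC = 5.0           # supply voltage (assumed 5 V board)
ADC_MAX = 1023      # full-scale reading of a 10-bit ADC (assumed)
R_FIXED = 10_000.0  # fixed divider resistor in ohms (assumed 10 kOhm)


def ldr_resistance(adc_value: int) -> float:
    """Photoresistor resistance in ohms from an ADC reading of the divider midpoint.

    Assumed wiring: VCC -> photoresistor -> midpoint -> R_FIXED -> GND, so
    V_out = VCC * R_FIXED / (R_ldr + R_FIXED) and more light (lower R_ldr)
    gives a higher reading.
    """
    if not 0 < adc_value <= ADC_MAX:
        raise ValueError("reading must be between 1 and ADC_MAX")
    v_out = VCC * adc_value / ADC_MAX
    return R_FIXED * (VCC - v_out) / v_out


def normalize(adc_value: int, dark: int, bright: int) -> float:
    """Map a reading to 0..1 between readings calibrated in the room (dark < bright)."""
    t = (adc_value - dark) / (bright - dark)
    return min(1.0, max(0.0, t))


print(ldr_resistance(512))                   # about 9980 ohms, close to R_FIXED at mid-scale
print(normalize(300, dark=200, bright=400))  # -> 0.5
```

Choosing the fixed resistor near the photoresistor's resistance under typical room light tends to put readings mid-scale, where the divider is most sensitive.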

Aaaand...that's the plan!

Wednesday, January 27, 2016

More bowing inspiration: The nail violin

Tuesday, January 26, 2016

String Potentiometers Revisited

Last year I spent a lot of time researching GameTrak string potentiometer technology and ways to use it.

Although I'm not planning on using string potentiometers this semester, I've gone back to researching GameTraks for a redesign of the String Accordion. I've specifically been working on finding alternative sources for my string potentiometer needs, thinking about building them out of easily attainable parts rather than fishing them out of GameTraks.

A new GameTrak design came out in 2006, just before the company was bought out and right before the Wii revolution of wireless motion-sensing remotes. The string-based system was eventually dropped for a Wiimote clone that was obviously unsuccessful. That's unfortunate, because the last tech update to GameTrak before it jumped over to wireless was a really nice step up from the original.

This was a brilliant change in design for GameTrak. Instead of having a plastic flange for the PCB to sit on, the new design had the PCB screwed directly into the spring enclosure. It boasts a smaller footprint and easily accessible wires via a smaller, new PCB next to the string-length potentiometer. It's everything I'm looking for in a string potentiometer system, but they only ran a short production run in the UK in 2006. UGH.

All that's left is here: http://www.amazon.co.uk/Mad-Catz-Gametrak-Controller-PS2/dp/B000B0N448

Even with that, I can't guarantee that those are the right type. The new GameTraks look like they're lumped in with the old ones, and I'd hate to pay premium shipping to get the same old GameTraks I already have.

In researching ways to build my own GameTrak system, I've found that measuring string length is fairly simple: quadrature encoding on a tape-measure-type spool would do the trick. The really tough part is measuring the XY position like the GameTrak does. I've scoured the internet and still haven't found a viable alternative.
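For the string-length half, quadrature decoding means reading two offset encoder lines (A and B) on the spool: they step through the Gray-code cycle 00, 01, 11, 10 in one order when the string pays out and in the reverse order when it retracts, so each transition is one count in a known direction. Here's a rough sketch of that decoder; the counts per revolution, spool circumference, and direction convention are illustrative assumptions, and it doesn't attempt the XY problem.

```python
# One quadrature cycle, in the order the A/B lines step when the string pays out
# (direction convention is arbitrary; swap signs if it reads backwards).
_SEQ = [0b00, 0b01, 0b11, 0b10]
_STEP = {}
for i, s in enumerate(_SEQ):
    _STEP[(s, _SEQ[(i + 1) % 4])] = +1  # next state in the cycle: one count out
    _STEP[(s, _SEQ[(i - 1) % 4])] = -1  # previous state: one count back in


class QuadratureSpool:
    """Counts quadrature edges from a spool encoder and converts them to string length."""

    def __init__(self, counts_per_rev: int, spool_circumference_mm: float):
        # counts_per_rev = 4 x encoder slots, because every A and B edge is counted
        self.mm_per_count = spool_circumference_mm / counts_per_rev
        self.count = 0
        self.errors = 0     # transitions where both lines changed (a step was missed)
        self._state = None  # set from the first reading

    def update(self, a: int, b: int) -> None:
        """Feed one sample of the A and B lines (each 0 or 1)."""
        new = (a << 1) | b
        if self._state is None or new == self._state:
            self._state = new
            return
        step = _STEP.get((self._state, new))
        if step is None:
            self.errors += 1
        else:
            self.count += step
        self._state = new

    def length_mm(self) -> float:
        # Assumes a constant spool circumference; a real tape-measure spool's
        # effective radius changes as the string winds and unwinds.
        return self.count * self.mm_per_count


spool = QuadratureSpool(counts_per_rev=400, spool_circumference_mm=100.0)
for a, b in [(0, 0), (0, 1), (1, 1), (1, 0), (0, 0)]:
    spool.update(a, b)
print(spool.count, spool.length_mm())  # -> 4 1.0
```

The `errors` counter matters in practice: if the lines are sampled too slowly for a fast pull, both bits change between samples and the direction becomes ambiguous, so hardware interrupts or a dedicated counter are usually preferred over polling.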

I'm considering trying to get in contact with the engineer credited with this design. I wonder if he knows how useful his contraption is to the design world right now?

Saturday, January 23, 2016

Friday, January 22, 2016

Whoa Radio Drum Reboot at NAMM...Nice Stuff from former Trinity College Dublin....

Not really a radio drum per se, but the same outcome.

http://www.synthtopia.com/content/2016/01/22/aerodrums-intros-virtual-reality-drum-set-for-oculus-rift/

The Data-Driven DJ and the Dangers of Style

I'm focusing my design on the sketch of a data-driven percussive instrument that I presented in class a week ago, and in my research I've come across an interesting data-driven musician I'd like to share with you. He goes by the name the Data-Driven DJ (real name Brian Foo) and is a conceptual artist/programmer. He has quite a few projects already, each focusing on a different data set, including one on income inequality along a NYC subway line.

His visualizations are beautiful and well synchronized with the presentation of the data. However, I find that his musical mappings range from imaginative to uninteresting, though he is upfront about his formal musical training and is getting better with each project. He intentionally restricts himself to one sound world within each project and often relies on musical gestures and sounds for his mappings that together connote a distinct, pre-existing style (popular music or, in the case above, Steve Reich). While this might make his work aurally accessible, the reliance on pre-existing styles makes his work predictable across the span of each composition and reduces the drama within each data set. For example, in the subway project above, the sounds signifying areas with high median income aren't really that different from those for lower-median-income areas. If his purpose was to orient the listener towards the sharp contours of the data set, I think relying on a pre-existing style took away the potential for shock at any particular data point.
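One way to keep those contours audible, sketched here as my own illustration and not as how Brian Foo builds his pieces, is to scale each data set against its own extremes so that its biggest contrast becomes the biggest contrast in the sound. The version below maps values onto a filter cutoff with exponential interpolation (equal ratios rather than equal Hz), since we hear frequency roughly logarithmically. The 200 Hz to 8 kHz range and the income figures are made up for illustration.

```python
def map_to_cutoff(values, f_lo=200.0, f_hi=8000.0):
    """Map each data value to a filter cutoff in Hz, data min -> f_lo, data max -> f_hi.

    Normalizing against the data set's own extremes means its largest contrast
    becomes the largest contrast in the sound. Interpolating exponentially
    (equal ratios rather than equal Hz) spreads the values more evenly in
    perceived pitch.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return [f_lo for _ in values]
    return [f_lo * (f_hi / f_lo) ** ((v - lo) / (hi - lo)) for v in values]


# Made-up median incomes for four stops, for illustration only.
incomes = [25_000, 40_000, 120_000, 250_000]
for income, hz in zip(incomes, map_to_cutoff(incomes)):
    print(f"{income:>8,} -> {hz:7.0f} Hz")
```

The trade-off is that per-data-set scaling exaggerates small ranges too, so a data set with little real inequality would still span the full sweep unless the range is fixed across projects.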